Auditing Time-Based Tracking Events

Time spent on page was never a perfect metric.

When calculating time spent on page, most out-of-the-box analytics implementations still rely on some flavor of this formula:

- Take the delta between the current page load and the next page load in the visit/session.

- Average the deltas across all sessions that saw the page in question plus at least one more page.

- Sessions that end on this particular page are excluded from the average (there is no next page load to take a delta from).
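As a rough illustration, the three steps above can be sketched in Python. This is a minimal sketch, not any analytics vendor's implementation: it assumes each session is available as an ordered list of hypothetical `(page, load_time_seconds)` hits, and the function name `legacy_time_on_page` is made up for this example.

```python
from collections import defaultdict
from statistics import mean

# One session: ordered (page, load_time_seconds) hits.
Session = list[tuple[str, float]]


def legacy_time_on_page(sessions: list[Session]) -> dict[str, float]:
    """Average time on page computed only from page-load timestamps."""
    deltas: dict[str, list[float]] = defaultdict(list)
    for hits in sessions:
        # Pair each page load with the next page load in the same session.
        for (page, loaded_at), (_next_page, next_loaded_at) in zip(hits, hits[1:]):
            deltas[page].append(next_loaded_at - loaded_at)
        # The session's last page has no next load, so it adds no delta:
        # exit pages, including every single-page session, are excluded.
    return {page: mean(values) for page, values in deltas.items()}


sessions = [
    [("/home", 0.0), ("/article", 40.0), ("/about", 160.0)],
    [("/article", 0.0), ("/home", 80.0)],
    [("/article", 0.0)],  # soft bounce: one page, then the visitor leaves
]
print(legacy_time_on_page(sessions))  # → {'/home': 40.0, '/article': 100.0}
```

The third session, a soft bounce that may have lasted several minutes, contributes nothing, and `/about` gets no value at all because every view of it was an exit.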

The advent of Single Page Applications (SPAs) and the proliferation of web-optimized video content call for a better set of metrics to describe the time visitors spend on a given content item. On highly dynamic, media-rich sites, almost all sessions are soft bounces: visitors load one page, consume content for a while, and then leave. Because the legacy formula excludes sessions that end on the page, relying on it alone would leave most of that viewing time unmeasured, even though time spent engaging with content is often one of the most important metrics for such sites.

As a result, many analytics implementations have evolved to an enhanced formula:

- When a visitor lands on a page, begins playing a video, or engages with content in some other significant way (often referred to as "calls to action"), the tracking JavaScript is told to start sending out pings at specific time intervals.

- Using the information provided by these pings, the analytics framework can measure view times much more accurately, including for visitors who never load a second page.
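One simple way to turn pings into view times is to keep the furthest checkpoint each session reached on each page and average those values. This is an assumption for illustration only (real analytics tools weight and sessionize pings in their own ways); the `(session_id, page, seconds_elapsed)` ping shape, the session IDs, and the function name are hypothetical, and the 6, 30, and 120 second checkpoints are just example values.

```python
from collections import defaultdict
from statistics import mean

# One timer ping: (session_id, page, seconds elapsed since engagement began).
Ping = tuple[str, str, float]


def ping_time_on_page(pings: list[Ping]) -> dict[str, float]:
    """Average engaged time per page, estimated from timer pings."""
    # Furthest checkpoint each session reached on each page.
    furthest: dict[tuple[str, str], float] = {}
    for session_id, page, elapsed in pings:
        key = (session_id, page)
        furthest[key] = max(furthest.get(key, 0.0), elapsed)

    # Average the per-session values; single-page sessions count too.
    per_page: dict[str, list[float]] = defaultdict(list)
    for (_session_id, page), elapsed in furthest.items():
        per_page[page].append(elapsed)
    return {page: mean(values) for page, values in per_page.items()}


pings = [
    ("s3", "/article", 6.0), ("s3", "/article", 30.0), ("s3", "/article", 120.0),
    ("s4", "/article", 6.0), ("s4", "/article", 30.0),
]
print(ping_time_on_page(pings))  # → {'/article': 75.0}
```

Both sessions here are single-page visits, yet both are counted. The furthest checkpoint is a lower bound: session `s4` may have stayed well past 30 seconds without reaching the 120-second ping.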

Automated audits of timer-based events are as tricky as they are necessary.

Many tag auditing solutions have evolved accordingly so they can QA timer-based beacons, whose manual validation would otherwise be a major drag. But because of the technical challenges involved, how easily these checks can be automated varies greatly from tool to tool.

Here's a quick look at how easy it is to automate the detection of timer pixels using QA2L's point-and-click web interface:

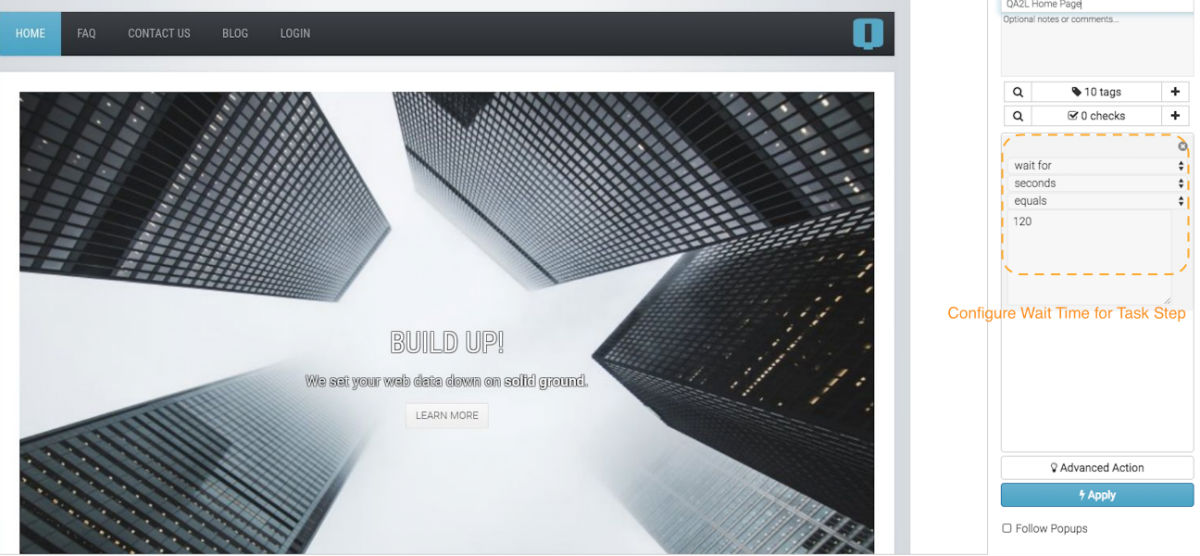

- Load a page in our design interface, click on "Advanced Action" and select "wait for" from the list of available options. In this example we'll wait for a total of 120 seconds.

- Waiting for 120 seconds triggers the timer-based events set to fire at 6, 30, and 120 seconds. QA2L detects these timer requests, and you can then add checks for the parameters they carry.

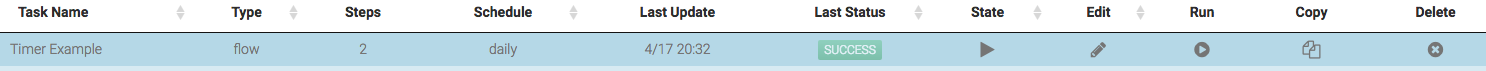

- The task can then be saved and scheduled to run autonomously, ensuring that your timer-based events keep collecting the expected data.
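For readers curious about what such a check boils down to, here is a hedged Python sketch. It is not QA2L's API or implementation; it assumes you already have the captured requests as `(seconds_after_page_load, url)` pairs, and the `/collect` endpoint, the `ev=timer` and `sec` parameters, and the 2-second tolerance are all hypothetical values chosen for the example.

```python
from urllib.parse import parse_qs, urlparse

# A captured request: (seconds after page load, full request URL).
Request = tuple[float, str]


def audit_timer_beacons(
    requests: list[Request],
    expected_offsets: list[float],
    tolerance: float = 2.0,
) -> list[str]:
    """Check timer beacons against a schedule; an empty result means pass."""
    # Keep only timer beacons (hypothetical "/collect" endpoint, "ev=timer").
    beacons: list[tuple[float, dict[str, str]]] = []
    for fired_at, url in requests:
        parsed = urlparse(url)
        params = {key: values[0] for key, values in parse_qs(parsed.query).items()}
        if parsed.path == "/collect" and params.get("ev") == "timer":
            beacons.append((fired_at, params))

    problems: list[str] = []
    for offset in expected_offsets:
        # Timing check: a beacon must fire within `tolerance` seconds of the offset.
        matches = [params for fired_at, params in beacons
                   if abs(fired_at - offset) <= tolerance]
        if not matches:
            problems.append(f"no timer beacon near {offset:g}s")
            continue
        # Parameter check: the beacon should report the checkpoint it stands for.
        reported = matches[0].get("sec")
        if reported != f"{offset:g}":
            problems.append(f"beacon near {offset:g}s has sec={reported!r}")
    return problems


captured = [
    (0.4, "https://analytics.example.com/collect?ev=pageview"),
    (6.1, "https://analytics.example.com/collect?ev=timer&sec=6"),
    (30.2, "https://analytics.example.com/collect?ev=timer&sec=30"),
    (120.3, "https://analytics.example.com/collect?ev=timer&sec=120"),
]
print(audit_timer_beacons(captured, [6, 30, 120]))  # → []
```

The tolerance window exists because timers rarely fire at exactly the scheduled instant, so an exact-match comparison on timestamps would produce false failures.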

It gets even trickier...

The above is actually the simplest test scenario. Specific tracking timers are often set off by video playback, or even by scrolling down to a certain portion of the page. And there is frequently no reliable way to know when an asynchronous event (such as an API request) has completed, and therefore when to start or stop listening for timer-based events.
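When there is no explicit completion signal, a common workaround in test automation is to poll for an observable condition with a deadline, and only start the timer window once the condition holds. The Python sketch below shows that pattern in generic form; `wait_until` and `api_response_received` are hypothetical helpers, and the real condition (for example, a check that a video player reports it is playing, or that an API response has arrived) depends entirely on your own test harness.

```python
import time
from typing import Callable


def wait_until(
    condition: Callable[[], bool],
    timeout: float,
    poll_interval: float = 0.25,
) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds pass.

    Returns True if the condition was met in time, False otherwise.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            return False
        # Sleep for one poll interval, but never past the deadline.
        time.sleep(min(poll_interval, remaining))


# Simulated asynchronous event: it "completes" on the third check.
checks = {"count": 0}


def api_response_received() -> bool:
    checks["count"] += 1
    return checks["count"] >= 3


if wait_until(api_response_received, timeout=5.0, poll_interval=0.1):
    print("event completed; start listening for timer beacons")
```

In a scenario like video playback, the expected timer offsets would then be measured from the moment the condition became true rather than from page load.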

Luckily, QA2L excels at automating all of these and many other advanced test cases.

Suggested further reading: User Acceptance Testing Automated!