All That Data Is Not Gold

Data is the new gold, they say. It has also been called Black Gold, heralded as the most valuable asset a company can have, and even mentioned in the same breath as "Fort Knox".

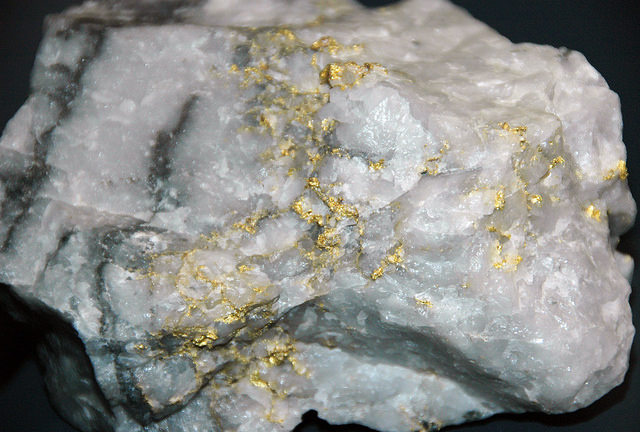

We live in the times of the Big Data gold rush; that much is certain. But when I think about data and its value, it's not shiny gold bullion bars that spring to mind; it's raw ore. With some veins of gold, if I'm feeling optimistic.

The tiny gold veins, much like the nuggets of insight contained in your raw data, are mixed in with the mass of rubble. Only hard work will extract any gold flakes from it.

In the early days, prospectors usually had to crush each piece of quartz into a fine sand of mostly quartz, sulfide, iron stains, traces of pyrite, and (if they were lucky) gold. Then they would patiently pan through the mix to separate out the gold specks. They used rough tools such as primitive stone crushers, pans, and meshes of different sizes. For each gram of gold, they would spend hours crushing and sifting through kilos of quartz rock.

The nature of your data is no different. For each order on your website there are thousands upon thousands of page views, visits, and visitors. For each new account there are splash pages, content pages, special promo pages, social content, retargeting campaigns, user comments, sharing buttons... the list is endless. Much like the quartz specimen, the data is all intertwined. Stitching it together and attributing it to the correct marketing channel, promo code, or user ID takes specialized cookies, customized timestamps, individual campaign codes, and tags with a specific syntax.

In a Forbes article titled "The Big Data Gold Rush", Brad Peters writes:

"That’s a lot of information. But here’s the thing: buried in that Himalayas of data will be some incredibly valuable information"

Did I say that expecting to find a gold vein in a single lump of quartz was optimistic? More often than not, the insight is hidden not in a fist-sized rock but in a mountain of data you need to sift through. We need a more accurate slogan: "All That Data Is Not Gold, but Maybe There Is Gold in the Data".

This raises a number of questions:

1. Do we treat all data equally? (No)

2. In the context of quality, are all data sources and data points given the same weight or priority? (No)

3. Are some user actions, like a purchase, almost infinitely more important than others, like clicking 3 times on the "About us" page? (Yes)

4. When it comes to QA-ing data quality, do we focus on the key metrics and dimensions and make them the primary goal of our QA efforts? (Heck, yes)

So how do we go about it? Do we treat the mountain of analytics data generated by your website as a single unit, crush it up in our ginormous rock crusher, and then sift through all the rubble looking for precious nuggets?

Some tagging QA tools have certainly gone down that road. They offer to spider every one of your pages, giving all pages the same priority and performing the same basic checks. If and when they reach your really important pages (shopping carts, booking forms, user registration flows), they often choke, unable to deal with user authentication or dynamic form inputs.

Crawling QA tools generate their own mountain of data, giving an illusion of control and accomplishment. You will end up with tagging hierarchies (for whatever that's worth) and a report that tells you Google Analytics was found on 93% of your pages (you forgot to tag the "Investor Relations" page!). But you still won't know whether your key site flows and their corresponding KPIs are being tracked correctly: now, today, always.

Because not all data is created equal, the approach to data quality assurance should be fundamentally different. Instead of trying to grind down mountains, we should target the veins early on. The key flows on your site and the metrics they generate should be the first stop for QA. Maybe even the second and third stop. And wouldn't it be great if all these tools made it easier to monitor key flows instead of all that's ever flowed?

Developing a targeted plan for the smart and efficient deployment of an automated QA tool does not have to be hard:

1. Identify your key metrics and the flows which generate them.

2. Create corresponding test cases performing sequences of user actions.

3. Identify the tags that drive key metrics and create specific checks for the parameters which carry essential information.

4. Schedule the test cases to run periodically.

5. Get alerts when data collection deviates from the expected values.
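
As a rough illustration of steps 3 and 5, here is a minimal Python sketch that checks one captured tag request against expected parameter values and returns a list of deviations that could feed an alert. It is not how QA2L works internally: the parameter names (`ev`, `tid`, `rev`, `cur`), the collector URL, and the helper names are all hypothetical, and replaying the flow to capture the request (step 2) and scheduling the runs (step 4) are left out.

```python
from typing import Callable, Dict, List
from urllib.parse import parse_qs, urlparse


def is_positive_number(value: str) -> bool:
    """True if the value parses as a number greater than zero."""
    try:
        return float(value) > 0
    except ValueError:
        return False


# Hypothetical rules for the tag fired on an order confirmation page.
# Each entry maps a query-string parameter to a check its value must pass.
PURCHASE_TAG_RULES: Dict[str, Callable[[str], bool]] = {
    "ev": lambda v: v == "purchase",              # event name
    "tid": lambda v: v != "",                     # order / transaction id must not be blank
    "rev": is_positive_number,                    # revenue must be a positive number
    "cur": lambda v: v in {"USD", "EUR", "GBP"},  # currency from an allow-list
}


def check_tag(hit_url: str, rules: Dict[str, Callable[[str], bool]]) -> List[str]:
    """Return the problems found in one captured tag request (empty list = OK)."""
    # keep_blank_values=True so that "tid=" is reported as a bad value, not as missing.
    query = parse_qs(urlparse(hit_url).query, keep_blank_values=True)
    params = {name: values[0] for name, values in query.items()}
    problems = []
    for name, is_valid in rules.items():
        if name not in params:
            problems.append(f"missing parameter '{name}'")
        elif not is_valid(params[name]):
            problems.append(f"unexpected value for '{name}': {params[name]!r}")
    return problems


if __name__ == "__main__":
    good = "https://collect.example.com/t?ev=purchase&tid=A1001&rev=59.90&cur=USD"
    bad = "https://collect.example.com/t?ev=purchase&rev=0&cur=usd"
    print(check_tag(good, PURCHASE_TAG_RULES))  # []
    print(check_tag(bad, PURCHASE_TAG_RULES))
    # ["missing parameter 'tid'", "unexpected value for 'rev': '0'", "unexpected value for 'cur': 'usd'"]
```

The rules live next to the key flow they belong to rather than being applied uniformly to every page, which mirrors the "target the veins" idea: a non-empty result on the checkout flow is worth an alert, while the same check is simply never run on pages that carry no key metric.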

Not sure where to start? Contact us and we'll help you down the path of key data validation using QA2L.