Tagging Specs Like Never Before

The dreaded tagging specification goes by many exotic names and wears many strange faces.

A full gamut would span 50MB Word documents, notes and code awkwardly stuffed into spreadsheet cells, website screenshots splashed across lengthy email threads, and, of course, PowerPoint decks, the most natural fit for the job!

Each agency brings its own flavor to the table; organizations adopt a format and adapt it to their unique needs; individual analysts further modify it to match their own taste and their own sense of standards. The net effect of it all is that there are NO standards.

At QA2L, we have devised a new method for addressing this challenge. Some of the requirements we considered when rethinking the tagging specification included:

- Specs should follow a uniform standard.

- Specs should be visual, intuitive, and easy to understand by analysts, developers, and business users alike.

- Specs should be closely and seamlessly integrated into the full analytics life cycle: from requirements gathering, implementation, and initial QA to ongoing data quality and governance.

We'll demonstrate these points with a short role-playing exercise involving a business stakeholder, an analyst, and a developer:

Business User: "I am interested in tracking how many people land on our new Stories page and go on to read a travel story."

Analyst: "Sure, let's take a look at the steps in this flow and what we need to track for each."

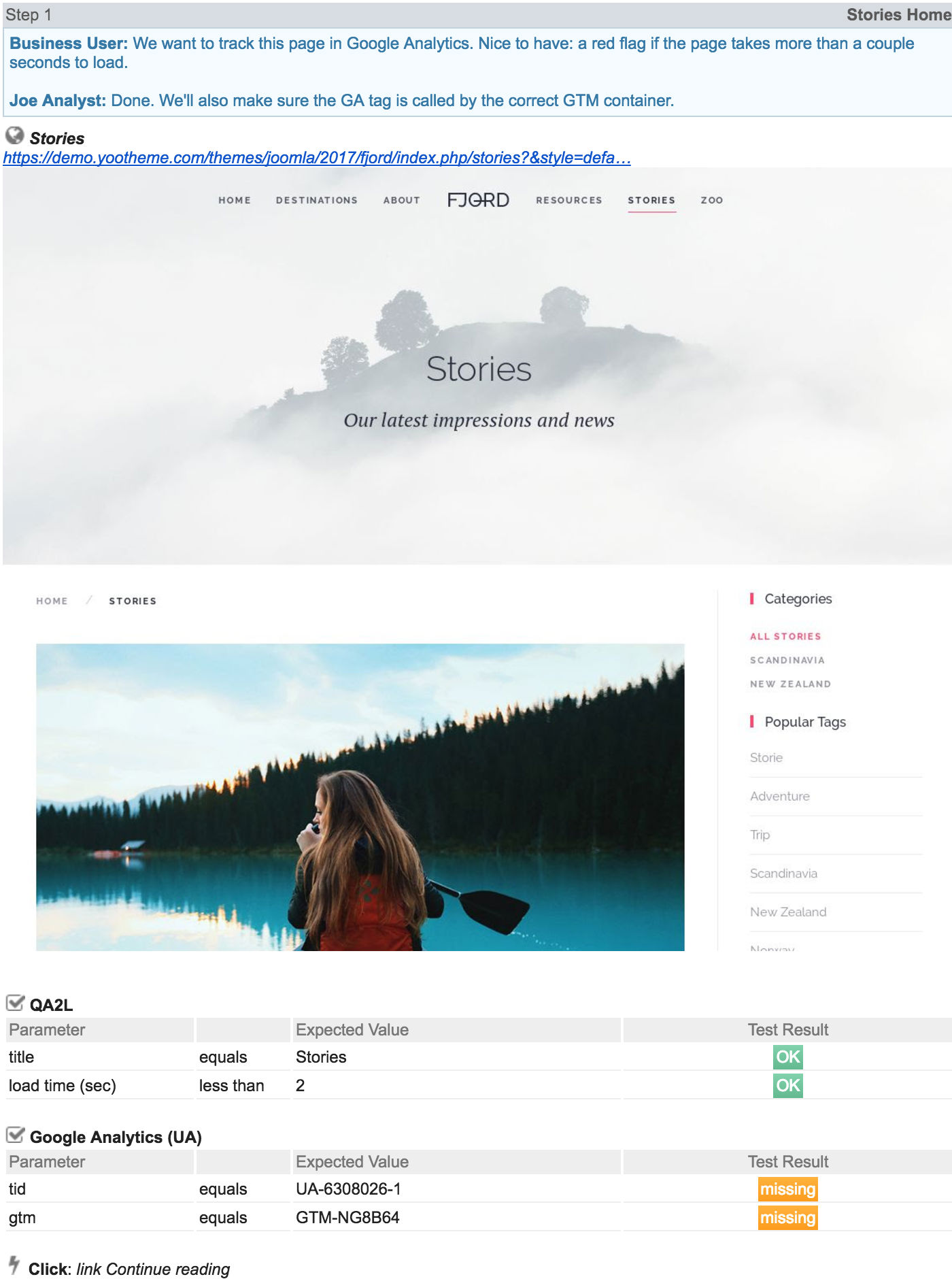

Using QA2L.com's visual flow technology, even the (less technical) Business User has no trouble stepping through the flow of landing on the new Stories page and clicking Read More. He and the Analyst annotate the steps, working together toward their tracking goals.

In response to the Business User's reporting requests, the Analyst defines specific checks for data tags that will fulfill these requirements.

On the first step, the Analyst uses the QA2L tag to run a quick check against page load times. He then defines a check for a Google Analytics tag that doesn't yet exist on the page. Once the tag is added, this check will pass (and will keep passing unless and until that tracking breaks).
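To make the step-1 checks concrete, here is a minimal, hypothetical sketch (not QA2L's implementation). It assumes the network requests fired during page load have already been captured as a list of URLs, along with a measured load time, and it treats a Universal Analytics hit to a `collect` endpoint with `t=pageview` as "the GA tag". The 3000 ms budget and the function names are illustrative assumptions.

```python
from urllib.parse import urlparse, parse_qs

# Hypothetical page-load budget; choose a value that suits your site.
LOAD_TIME_BUDGET_MS = 3000

# Universal Analytics hits are sent to paths such as /collect, /r/collect, or /j/collect.
GA_COLLECT_PATHS = {"/collect", "/r/collect", "/j/collect"}


def is_ga_pageview(url: str) -> bool:
    """Return True if the URL looks like a Universal Analytics pageview hit."""
    parts = urlparse(url)
    if parts.netloc != "www.google-analytics.com" or parts.path not in GA_COLLECT_PATHS:
        return False
    return parse_qs(parts.query).get("t") == ["pageview"]


def check_step_one(load_time_ms: float, requests: list[str]) -> dict[str, bool]:
    """Run both step-1 checks against one captured page load."""
    return {
        "load_time_ok": load_time_ms <= LOAD_TIME_BUDGET_MS,
        # Fails until the GA tag is implemented, then passes until it breaks.
        "ga_pageview_present": any(is_ga_pageview(u) for u in requests),
    }


# Before and after the developer adds the GA tag (example URLs are made up).
before = check_step_one(1850, ["https://example.com/stories"])
after = check_step_one(1850, [
    "https://example.com/stories",
    "https://www.google-analytics.com/collect?v=1&t=pageview&tid=UA-XXXXX-Y&dp=%2Fstories",
])
print(before)  # {'load_time_ok': True, 'ga_pageview_present': False}
print(after)   # {'load_time_ok': True, 'ga_pageview_present': True}
```

Writing the check before the tag exists is deliberate: the failing result doubles as the implementation to-do.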

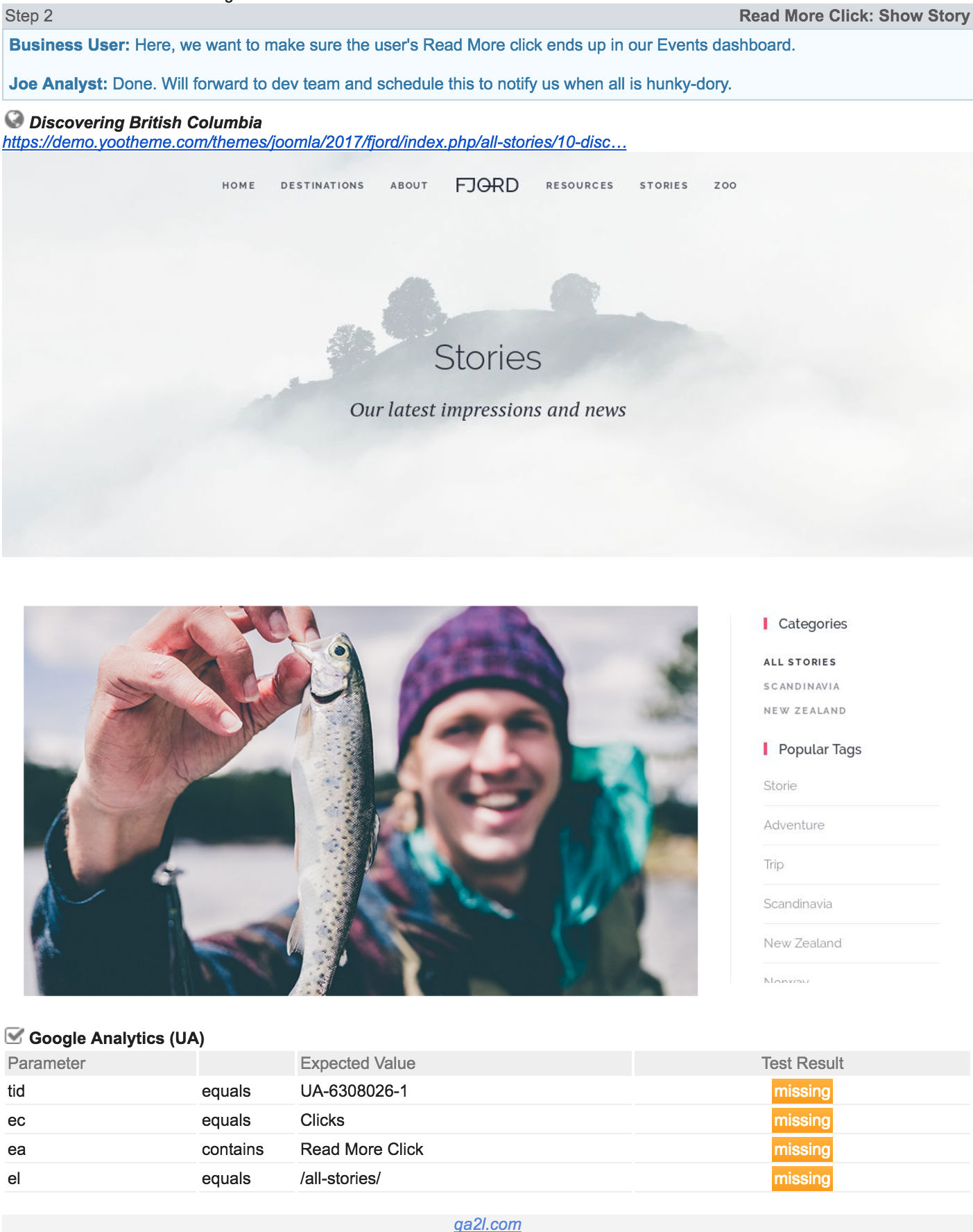

On the second step, the Analyst wants to see a GA event with specific Event Category, Action, and Label values. He can define these in QA2L together with a comment or any additional instructions for the Developer or GTM Specialist. Since the tracking is not on the page yet, these values will show up as missing for the time being. Rather than checking back manually to see whether they have appeared, the Analyst can schedule the task to run automatically at a given interval.
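The step-2 requirement can be written down as data plus a field-by-field comparison. In this hypothetical sketch (an assumption, not QA2L's API), the spec lists the expected Event Category, Action, and Label, which Universal Analytics sends as the `ec`, `ea`, and `el` Measurement Protocol parameters on a hit with `t=event`. Each field is reported as `pass`, `missing`, or `mismatch`; the expected values below are made-up examples.

```python
from urllib.parse import urlparse, parse_qs

# Hypothetical spec for the "Read More" click; the values are illustrative.
EXPECTED_EVENT = {"ec": "Stories", "ea": "Read More", "el": "Paris in Spring"}


def event_hits(requests):
    """Yield the query parameters (first value of each) of every GA event hit."""
    for url in requests:
        parts = urlparse(url)
        if parts.netloc != "www.google-analytics.com":
            continue
        params = {k: v[0] for k, v in parse_qs(parts.query).items()}
        if params.get("t") == "event":
            yield params


def check_event(requests, expected=EXPECTED_EVENT):
    """Compare the first event hit against the spec, field by field.

    Simplifying assumption: the step fires a single GA event.
    """
    hit = next(event_hits(requests), None)
    results = {}
    for field, want in expected.items():
        if hit is None or field not in hit:
            results[field] = "missing"
        elif hit[field] != want:
            results[field] = "mismatch"
        else:
            results[field] = "pass"
    return results


hit = ("https://www.google-analytics.com/collect?v=1&t=event"
       "&ec=Stories&ea=Read%20More&el=Paris")
print(check_event([]))     # {'ec': 'missing', 'ea': 'missing', 'el': 'missing'}
print(check_event([hit]))  # {'ec': 'pass', 'ea': 'pass', 'el': 'mismatch'}
```

Reporting per field, rather than a single pass/fail, tells the developer exactly which value still needs work.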

The Developer is notified about the requirements (e.g., cc'ed on an email showing the output of a task run) and automatically receives highly visual, well-structured implementation notes for the new tracking. If she has access to QA2L, she can even validate the implementation herself!

At any point in this process, the task can be scheduled to run automated checks at a given interval. This not only speeds up the initial implementation, it also bullet-proofs the tagging against ruinous future updates and adds to a growing library of automated QA processes. This is test-driven development (TDD) in its purest form: the check is written before the tracking exists, fails until the tracking is implemented, and from then on guards against regressions.
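The TDD arc of a scheduled task can be illustrated with a hypothetical sketch: a plain loop with `time.sleep` stands in for a real scheduler (in practice you would rely on cron or the QA tool's own scheduling). It re-runs a check and notifies only when the outcome changes, so the team hears when new tracking starts passing and again if a later release breaks it. The function name and notification format are assumptions for illustration.

```python
import time
from typing import Callable


def run_scheduled(check: Callable[[], bool], runs: int, interval_s: float,
                  notify: Callable[[str], None] = print) -> list[bool]:
    """Run a QA check `runs` times, `interval_s` seconds apart.

    Notifies only when the outcome changes: the first run, a FAIL -> PASS
    transition (implementation done), or a PASS -> FAIL transition (regression).
    """
    history: list[bool] = []
    for i in range(runs):
        ok = check()
        if not history or history[-1] != ok:
            notify(f"run {i}: {'PASS' if ok else 'FAIL'}")
        history.append(ok)
        if i < runs - 1:
            time.sleep(interval_s)
    return history


# Simulated site states: not yet implemented, implemented, still fine, broken by a release.
states = iter([False, True, True, False])
history = run_scheduled(lambda: next(states), runs=4, interval_s=0)
# Prints "run 0: FAIL", "run 1: PASS", "run 3: FAIL"
```

Notifying on state changes only keeps a frequently scheduled task from flooding inboxes with identical results.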

The same approach can be used to implement and validate any vendor tags or custom data layer elements. As an added bonus, analytics QA automation is likely to surface general issues with the site's functionality, such as major bugs that prevent users from even completing the flow the task is validating.
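For custom data layer elements, a check might inspect the Google Tag Manager `dataLayer` array instead of network hits. This hypothetical sketch assumes the array has already been captured from the browser and serialized to JSON; the `story_view` event name and the required keys are invented for illustration.

```python
import json

# Hypothetical spec: every "story_view" push must carry these keys.
REQUIRED = {"event": "story_view", "keys": ["storyId", "storyCategory"]}


def check_data_layer(data_layer_json: str, spec=REQUIRED) -> list[str]:
    """Return a list of problems; an empty list means the check passes."""
    pushes = json.loads(data_layer_json)
    matches = [p for p in pushes
               if isinstance(p, dict) and p.get("event") == spec["event"]]
    if not matches:
        return [f"no dataLayer push with event={spec['event']!r}"]
    push = matches[-1]  # validate the most recent push for this event
    return [f"missing key {k!r}" for k in spec["keys"] if k not in push]


captured = '[{"event": "gtm.js"}, {"event": "story_view", "storyId": "123"}]'
print(check_data_layer(captured))  # ["missing key 'storyCategory'"]
```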

To sum up, organizing the tagging specification and implementation workflow around the creation of an automated QA task results in measurable efficiency gains:

1. Enforcing a consistent standard for documenting and collaborating on new tracking requirements means less time spent deciphering document formats and taking screenshots.

2. Tracking the implementation progress becomes a breeze rather than a chore.

3. The end result is not just correct implementation at launch, but also a capability for ongoing QA, freeing up human resources over time.

In related news, a Slack app can tie this process into some of the latest standards in team collaboration. Curious to try it out?

Tags: Data Governance Product News