Native App Tag Validation for the Faint of Heart

The difficulties of validating tags on web properties have received a lot of ink, but they pale in comparison to validating tracking within native mobile applications. In fact, any comparison is flawed at best. Mobile apps are not a different breed; they're a different species.

In this blog post, we go over the most common native app tag validation scenarios and, in doing so, outline some new methods for rising to the challenge.

The scenarios we'll focus on all concern the more common client-side tracking implementations. Some apps are tracked entirely server-side, in which case validation is even more painful, requiring advanced custom logging or other techniques such as checking the final reporting platform directly (for more on that, read our blog post on real-time validation of data in Adobe Analytics).

All good things come in threes, so the scenarios we'll cover are:

1. A newly tagged app.

2. An app that has been re-tagged to address previously known tagging defects.

3. A complete regression test of a new app build.

Scenario 1: "Congratulations! Your native app was just tagged. Or was it?"

You've shepherded the project through stakeholder interviews, KPI identification, and tagging requirements, and now your dev team tells you the good news: the tags have been implemented. You have your work cut out for you. Here is what you'll need to do:

- Obtain a build of your newly tagged app.

- Install the build on the devices from which you'd like to test.

- Install a proxy on your computer (Fiddler and Charles are the most common).

- Configure the proxy to intercept the network requests made by your mobile device(s).

- Go through the key journeys in your app and inspect the generated tracking requests.

- Document the individual tag values, referencing the values prescribed in your tagging plan.
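Once the proxy has captured the tracking requests, the inspection and documentation steps above boil down to decoding each request and comparing it with the tagging plan. Below is a minimal Python sketch of that comparison, assuming the tracking call is a GET request that carries its data in the query string. The tagging plan, the host `metrics.example.com`, and the Adobe Analytics-style parameter names (`pageName`, `v1`, `events`) are illustrative placeholders, and values are compared by exact string match, which is a simplification.

```python
from __future__ import annotations

from urllib.parse import parse_qsl, urlsplit

# Hypothetical tagging-plan entry for the app's home screen:
# parameter name -> value the plan prescribes.
TAGGING_PLAN = {
    "pageName": "app:home",
    "v1": "logged-in",
    "events": "event1",
}


def parse_tracking_call(url: str) -> dict[str, str]:
    """Decode the query-string parameters of a captured GET tracking request."""
    return dict(parse_qsl(urlsplit(url).query, keep_blank_values=True))


def compare_to_plan(params: dict[str, str], plan: dict[str, str]) -> list[tuple]:
    """Return one (parameter, expected, actual, status) row per planned parameter."""
    rows = []
    for name, expected in plan.items():
        actual = params.get(name)  # None if the parameter never fired
        rows.append((name, expected, actual, "PASS" if actual == expected else "FAIL"))
    return rows


# Illustrative request copied from a proxy session (host and values are made up).
captured = ("https://metrics.example.com/b/ss/examplersid/1/"
            "?pageName=app%3Ahome&v1=logged-in&events=event2")

for row in compare_to_plan(parse_tracking_call(captured), TAGGING_PLAN):
    print(" | ".join(str(cell) for cell in row))
# pageName | app:home | app:home | PASS
# v1 | logged-in | logged-in | PASS
# events | event1 | event2 | FAIL
```

The rows double as the documentation the last step asks for, so the results can be pasted into a spreadsheet instead of being transcribed by hand.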

While fairly straightforward, many of these steps have logistical dependencies:

- Perhaps you did not install the correct build.

- Your network setup, coupled with VPN configurations, can make the proxy settings tricky.

- You don't have the device you need in order to test on all platforms.

- Documenting the results can also be manual and prone to human error.

Scenario 2: "Devs fixed all known tag defects, please QA again (and again)."

It is not uncommon for your initial validation to uncover tagging deficiencies. Your dev teams will need to correct the defects and produce a new build for you to revalidate. You will then need to step through the same sequence identified above, verifying the newly fixed tags and regression-testing any legacy tags that were previously working.

This stage of the process carries all the dependencies outlined above, and you might have to iterate often, depending on the complexity of the tags and the quality of the work produced by the various dev teams. Documentation can be especially tricky if it requires maintaining multiple versions for different builds of the app, shared across different team members.
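One way to keep the per-build documentation manageable is to store the parameters captured at each journey step for every build and diff them. A minimal Python sketch follows; the step names, build labels (`build_41`, `build_42`), and parameter values are hypothetical. A diff only flags differences, so you still decide which ones are intended fixes and which are regressions.

```python
from __future__ import annotations

from typing import Dict

# Parameters captured at each journey step: step name -> {parameter: value}.
Capture = Dict[str, Dict[str, str]]


def diff_builds(old: Capture, new: Capture) -> list[str]:
    """Describe every parameter that changed, appeared, or disappeared between two builds."""
    findings = []
    for step in sorted(old.keys() | new.keys()):
        before, after = old.get(step, {}), new.get(step, {})
        for param in sorted(before.keys() | after.keys()):
            was, now = before.get(param), after.get(param)
            if was == now:
                continue
            if now is None:
                findings.append(f"{step}: {param} missing in new build (was {was!r})")
            elif was is None:
                findings.append(f"{step}: {param} added in new build ({now!r})")
            else:
                findings.append(f"{step}: {param} changed {was!r} -> {now!r}")
    return findings


# Hypothetical captures from two builds of the same journey.
build_41 = {
    "home": {"pageName": "app:home", "events": "event1"},
    "checkout": {"pageName": "app:checkout", "v5": "guest"},
}
build_42 = {
    "home": {"pageName": "app:home", "events": "event1,event7"},
    "checkout": {"pageName": "app:checkout"},
}

for finding in diff_builds(build_41, build_42):
    print(finding)
# checkout: v5 missing in new build (was 'guest')
# home: events changed 'event1' -> 'event1,event7'
```

In this example the added `event7` might be one of the fixes the dev team shipped, while the missing `v5` on checkout would be a regression worth raising; the diff cannot tell the two apart on its own.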

Scenario 3: "We released a new build, can you confirm the tags are still working?"

Your native apps will go through various iterations in their lifetime, from small-scale design changes to complete overhauls. Smaller changes may not require any updates to the tagging plan, but in preparation for larger-scale modifications, you may have to produce new tagging specifications to reflect changes or additions to the app's features.

In either case, you will need to perform regression tests when the new builds become available, facing the same set of challenges as in the other stages outlined earlier:

- Multiple dependencies to even begin validation

- Highly manual process, often requiring multiple rounds of tagging revisions

- Documentation inconsistencies

The main takeaway is that, regardless of where you are in the process, validating tags issued by native apps can be cumbersome and inefficient, jeopardizing the success of the marketing technology you are trying to enable.

What we've done to make things easier:

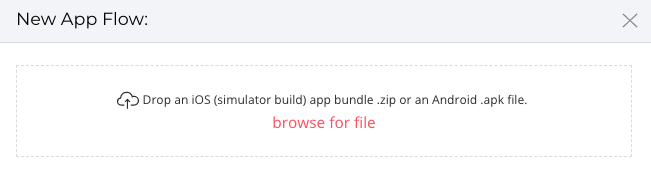

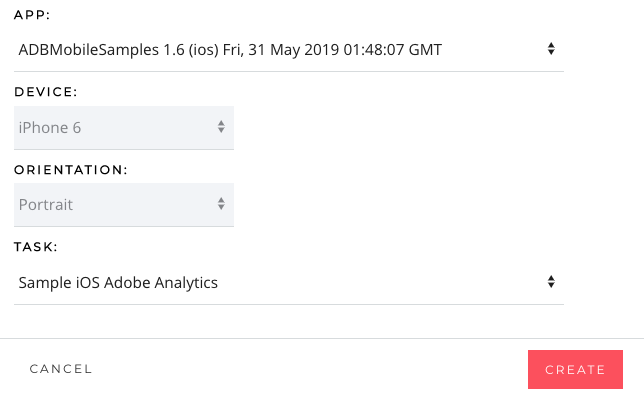

1. To kick things off, we have greatly simplified the process of getting a new app build ready for testing. QA2L lets you upload an iOS simulator build (as a zipped app bundle) or an Android .apk file to your account and have it running within a minute. No more struggling to provision physical devices to run special test builds distributed through specialized services:

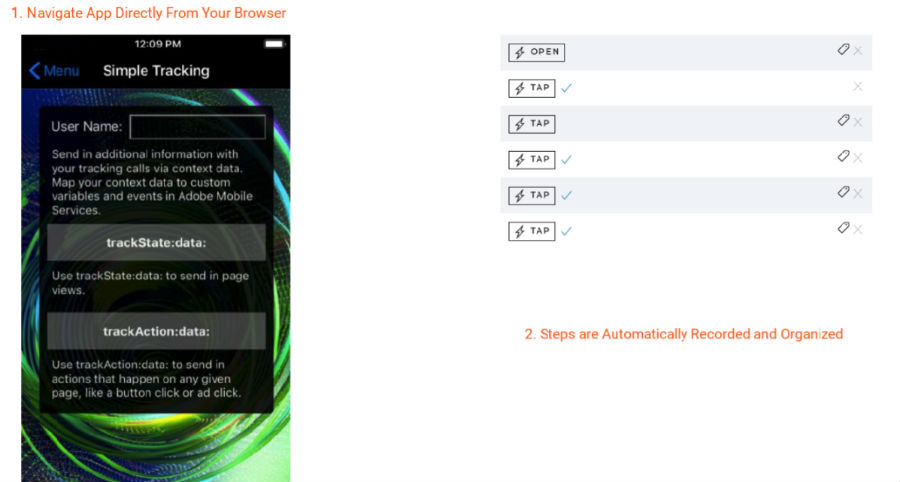

2. Once the app is uploaded, our interface lets you navigate it directly in your browser, recording each interaction and automatically collecting the tracking calls that fire along the way. This eliminates the need to change your network settings, install proxy tools, or spend precious time scouring hundreds of network requests for the ones you care about:

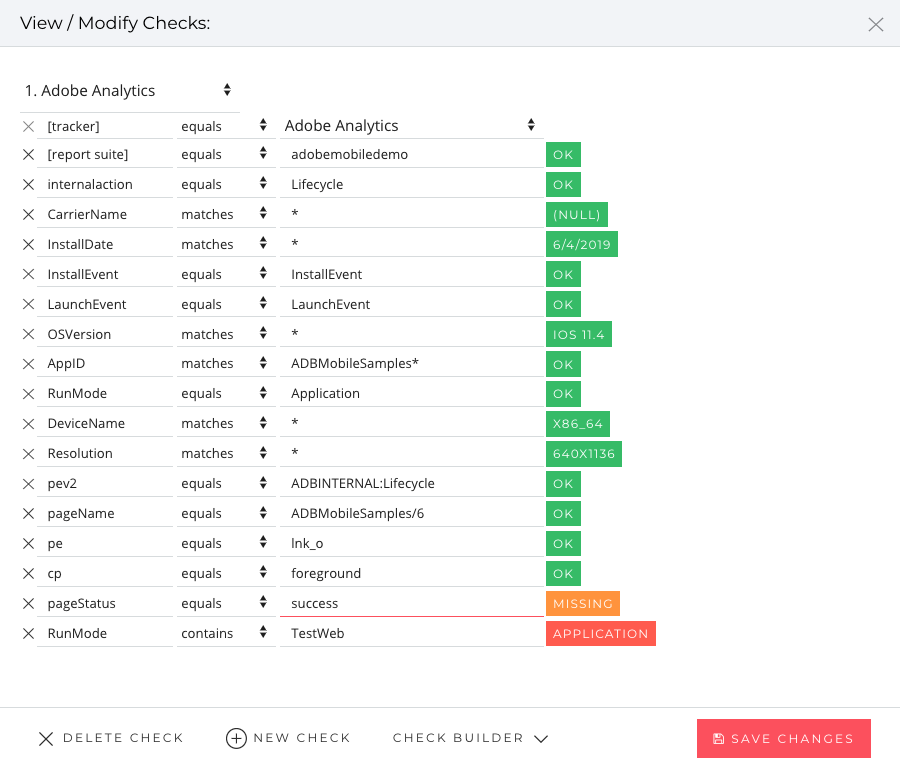

3. Our tool then lets you record checks against any of the tracking parameters using a robust set of rules. This can save you a great deal of time when you need to perform the same checks across a series of regression tests. It also naturally helps with consistency and documentation, resulting in better long-term governance.

4. The steps and checks you have recorded are organized into tasks. With a single click, you can swap in the most recent build provided by your dev team and rerun the tasks, fully automating repeatable validation steps.
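The general workflow described above (pick the tracking calls out of all captured traffic, apply reusable rules, rerun on every new build) can be illustrated in a few lines of Python. This is not QA2L's implementation; it is a hypothetical sketch in which `Check`, `Task`, the rule helpers, the collection host `metrics.example.com`, and the `v10` app-version variable are all invented for illustration, and every check applies to every tracking call in the journey.

```python
from __future__ import annotations

import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional
from urllib.parse import parse_qsl, urlsplit


@dataclass
class Check:
    """A rule applied to one tracking parameter (value is None when absent)."""
    param: str
    description: str
    predicate: Callable[[Optional[str]], bool]


def equals(param: str, value: str) -> Check:
    return Check(param, f"{param} == {value!r}", lambda v: v == value)


def matches(param: str, pattern: str) -> Check:
    rx = re.compile(pattern)
    return Check(param, f"{param} matches /{pattern}/",
                 lambda v: v is not None and rx.fullmatch(v) is not None)


def present(param: str) -> Check:
    return Check(param, f"{param} is set", lambda v: bool(v))


def tracking_calls(urls: List[str], host: str) -> List[Dict[str, str]]:
    """Keep only requests sent to the collection host, as decoded parameters."""
    calls = []
    for url in urls:
        parts = urlsplit(url)
        if parts.hostname == host:
            calls.append(dict(parse_qsl(parts.query)))
    return calls


@dataclass
class Task:
    """A named set of checks that every tracking call in a journey must pass."""
    name: str
    checks: List[Check]

    def run(self, calls: List[Dict[str, str]]) -> List[str]:
        failures = []
        for i, call in enumerate(calls):
            for check in self.checks:
                value = call.get(check.param)
                if not check.predicate(value):
                    failures.append(f"{self.name}, call #{i}: {check.description} failed (got {value!r})")
        return failures


task = Task("home journey", [
    matches("pageName", r"app:[a-z:]+"),
    equals("ch", "mobile"),
    matches("v10", r"\d+\.\d+\.\d+"),  # hypothetical app-version variable
    present("events"),
])

# Requests captured from one run of the journey (illustrative values).
captured = [
    "https://metrics.example.com/b/ss/rsid/1/?pageName=app%3Ahome&ch=mobile&v10=4.2.0&events=event1",
    "https://cdn.example.com/img/banner.png",
    "https://metrics.example.com/b/ss/rsid/1/?pageName=app%3Asearch&ch=mobile&v10=4.2",
]

for failure in task.run(tracking_calls(captured, "metrics.example.com")):
    print(failure)
```

Rerunning against a new build then amounts to capturing a fresh list of requests and calling `task.run` again. Keeping the rules as data rather than as manual notes is what makes the results repeatable and easy to document.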

This new setup eliminates many of the cumbersome, manual, and error-prone aspects of app validation. Automation saves significant time and ensures that your key app journeys are consistently tracked.

As an added bonus, when your automated tests run, QA2L produces a best-of-breed record of the validated tags, delivered directly to your email or to collaboration platforms such as Confluence, Slack, and Teams.

Curious to see it in action? Contact Us for a Demo.